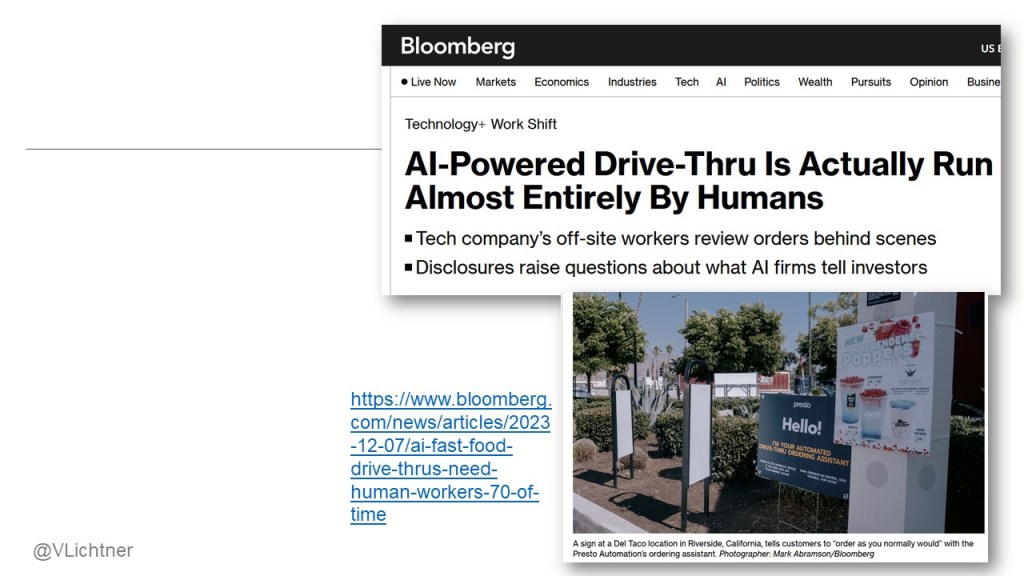

My thanks to the wonderful students who took my module on human-centred AI this past semester. We had fun uncovering the plentiful, resourceful, often unrecognised human work behind AI – for instance behind apparently-autonomous cars, apparently-autonomous drive-in fast food systems, and apparently-automated data moderation.

We discussed human-centred approaches in the AI life cycle – AI risks and ethical questions.

Large language models (LLMs) and machine learning (ML) systems have brought the non-neutrality of technology centre-stage, in what is known as the field of AI ethics (Dubber et al., 2020).

Langdon Winner (1980), in a seminal paper entitled ‘Do artifacts have politics?’, draws our attention to the non-neutral properties of artefacts such as tools or road infrastructures, and to how they shape human behaviours, ‘engineering relationships among people’ (Winner, 1980, p.124). Whether intentionally or not, technology design reflects the values, preferences and beliefs of developers and implementers, as well as the underlying socio-economic systems that make these technologies possible. Developers’ values may influence, for example, the choice of which guardrails to embed in ML systems, while profit-driven business models and anti-competitive market structures and practices underpin LLMs (Vipra and West, 2023).

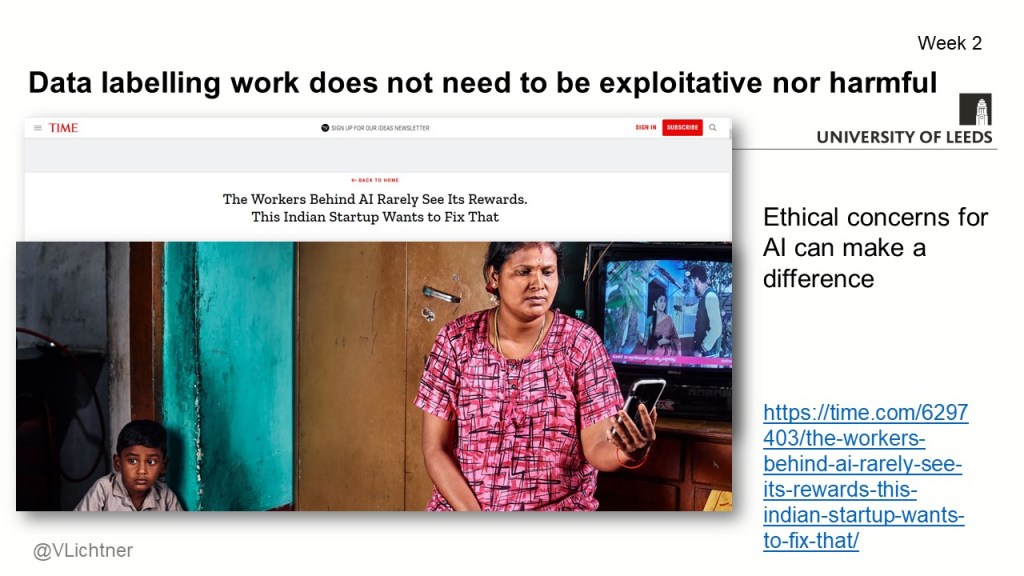

Society’s values are also embedded in the ‘raw’ materials (Gitelman, 2013) used for developing AI: the datasets. These datasets are created through sociotechnical work systems that embody values and relationships (Gitelman, 2013). AI systems trained on these data automatically reproduce those values and relationships, often involving biases, stereotypes and inequality (Crawford, 2021).

The design and adoption of AI therefore raise ethical questions, and asking those questions opens up possibilities for redress, possibly aided by the right regulation (Davies and Birtwistle, 2023). Or, to paraphrase Langdon Winner, when ethical issues are raised to public attention, it becomes evident that remedies and re-designs are required (Winner, 1980, p.125).

The syllabus - 5973M Human-Centred AI for 2023-24.

It was said at the start…

Upon completion of this module students will be able to:

1. Identify key ethical issues associated with the use of AI

2. Demonstrate knowledge and critical understanding of theories, approaches and methods applied to the development of human-centred AI

3. Critically evaluate the strengths and drawbacks of different AI systems

4. Develop and critically assess strategies to manage AI in organisations, and the associated challenges

5. Provide informed personal reflections on the consequences of AI for society and the future of work

Week 1 – Introduction to the module, and foundations on AI (ML and LLM).

Week 2 – Data reliance, data labelling and data work

Week 3 – AI ethics and transparency

Week 4 – Technology is not neutral. AI regulation.

Guest lecture: ethical AI with Magali Goirand

Week 5 – Guidance for the assignment, which required a commentary on a ChatGPT-generated text

Week 6 – Human-centred design: user-centred design principles, applied to LLMs

Week 7 – reading week!

Week 8 – Discussion of a practice essay, and of the human work behind AI.

Week 9 – From design to adoption: human-centred AI in organisations, and the theory of sociotechnical systems. AI audits.

Week 10 – AI hype. AI winters. Zombies*: recorded lecture by Professor David Woods.

Week 11 – Wrap-up, and cineforum (Wall-E).

We also had four workshops, including two role-play activities adapted from materials provided by Georgia Institute of Technology**: a local council deciding on the implementation of autonomous buses (see also Edinburgh’s AV buses initiative), and a university deciding whether to use AI in admissions. One workshop was based on Mattia Dessi’s research in South Africa’s mining industry. Thanks, Mattia!

Note: No LLM has been used to aid the design of this module (at least not knowingly!).

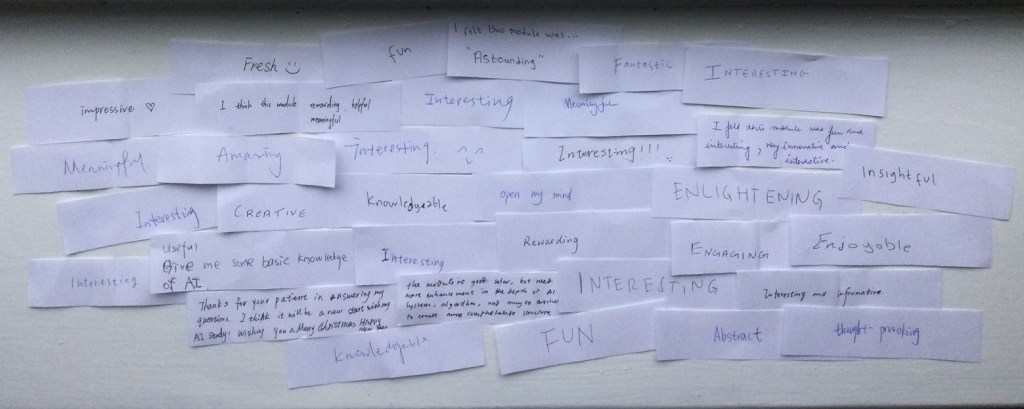

Student feedback on LUBS5973M 2023-24

‘amazing’, ‘astounding’, ‘fresh’, ‘impressive’, ‘meaningful’, ‘enlightening’, ‘open my mind’, ‘fun’, ‘novel’, ‘interesting’, ‘exciting’

Reading list (books)

Artificial unintelligence: how computers misunderstand the world. Meredith Broussard. MIT Press, 2018

The atlas of AI: power, politics, and the planetary costs of artificial intelligence. Kate Crawford, Yale University Press, 2021

The shortcut: why intelligent machines do not think like us. Nello Cristianini, CRC Press 2023.

A Practical Guide to Building Ethical AI, Reid Blackman 2020

A wide range of other materials were shared with the students each week – too wide to mention all here!

Footnotes

* Zombies: ‘myths about the relationship between people and technology that get in the way of designing safe systems for human purposes’ (Prof David Woods)

** Georgia Institute of Technology (US): Embedding Ethics in CS Classes Through Role Play (2023), by Ellen Zegura, Jason Borenstein, Benjamin Shapiro, Amanda Meng, and Emma Logevall, with support by the Mozilla Responsible Computer Science Challenge, funded by the Mozilla Foundation, the Omidyar Network, Schmidt Futures, and Craig Newmark Philanthropies. Materials and more information available at: https://sites.gatech.edu/responsiblecomputerscience/

References

Crawford, K. (2021). The atlas of AI: Power, politics, and the planetary costs of artificial intelligence. Yale University Press.

Dubber, M.D., Pasquale, F. and Das, S. (eds) (2020). The Oxford Handbook of Ethics of AI (online edn). Oxford University Press. https://doi.org/10.1093/oxfordhb/9780190067397.001.0001

Gitelman, L. (Ed.). (2013). Raw data is an oxymoron. MIT Press.

Lattimore et al (2020) Technical Paper, Australian Human Rights Commission. https://humanrights.gov.au/our-work/rights-and-freedoms/publications/using-artificial-intelligence-make-decisions-addressing

OpenAI (2023). ChatGPT3.5 [Large language model]. https://chat.openai.com/

Vipra, J. and West, S.M. (2023). Computational Power and AI. [Report] AI Now Institute. Available at: https://ainowinstitute.org/publication/policy/compute-and-ai

Winner, L. (1980). Do Artifacts Have Politics? Daedalus, 109(1), 121-136.

Davies, M. and Birtwistle, M. (2023). Regulating AI in the UK: Strengthening the UK’s proposals for the benefit of people and society. [Report] Ada Lovelace Institute. https://www.adalovelaceinstitute.org/report/regulating-ai-in-the-uk/